Technologies of Interbeing

How can interactive installations and other extended reality environments trouble the distinction between audience and artwork, nature and technology, self and other? Could technology help to stimulate human sensory perception so as to bring about a visceral experience of interbeing?

This piece includes excerpts from Human Scale Systems in Responsive Environments, originally published in IEEE MultiMedia. These passages discuss FoAM’s approaches to ‘mixed reality’ technologies developed for the t*series (TGarden, txOom and trg) – interactive playspaces that embraced technical modesty to achieve experiential depth.

We cannot separate human beings from the environment. The environment is in human beings and human beings are part of the environment.

Interbeing is a concept proposed by the Zen monk Thích Nhất Hạnh, emphasising the elemental interdependence of all beings and phenomena. While rarely addressed in technological discourse, interbeing anticipates many opportunities and concerns of embodied cognition, machine learning and interaction design.

(Re)Introduction

Two decades after its first publication, this text’s exploration of responsive environments occupies an underappreciated position in technological discourse; its vision of technology as perceptual mediator rather than control system remains resonant. Consider the “coffee cup problem”: despite sub-millimetre tracking and photorealistic rendering, today’s systems still struggle to anchor virtual objects reliably to physical surfaces, creating an immersion-breaking disconnect when a digital mug inexplicably slides through a real table. This slippage points to a fundamental limitation: despite advancing hardware capabilities, these technologies fall short on the embodied dimensions of experience. As systems have evolved from room-sized CAVE projections to head-mounted displays, technological development has narrowed rather than expanded our experiential horizons, with hardware prioritising visual fidelity over embodied engagement.

Where contemporary XR (Extended Reality, an umbrella term referring to augmented reality, mixed reality, and virtual reality) often treats the body as an obstacle to overcome, the t*series environments created conditions where boundaries between self, other, nature, and technology became permeable. These environments anticipated contemporary embodied interaction frameworks by treating participant deviation – the gap between designed intention and actual user behaviour – not as error, but as essential to meaning-making. This approach recognises that the most meaningful interactions often emerge precisely when users engage with systems in unexpected ways, departing from predetermined paths. As contemporary designers confront the limits of control-oriented paradigms, the text’s embrace of environmental indeterminacy resurfaces as a radical proposition: what if responsive systems required releasing design authority rather than perfecting it?

– Justin Pickard (2025)

Every system can become smarter, efficient, and more valuable by factoring in presence information. Society’s embrace of instant messaging has shown that a great deal of importance is placed on presence-enabled contact lists and instant connectivity. Even traditional resource management systems such as automated routing use availability and presence to select and connect people to the best available agent in a call center. Multimedia conferencing takes presence information a step further and asks the question of how to connect people based on their disparate communication capabilities. But all these uses of presence information, as exciting as they are now, and as much potential to develop further as they all have, are but a tip of the iceberg of the communication revolution ahead. In the future, the wealth of presence information—and the kinds of information we are able to obtain—will change everything.

– Dorée Duncan Seligmann (2005)

txOom, The Hippodrome Circus, Great Yarmouth, UK, 2002


In responsive environments, human participants manipulate digital media by modulating the physical environment around them through conscious and unconscious actions (such as bodily movement, physiological responses, speech, and social interaction). As the computational environment simultaneously senses and responds to these actions, the participants become immersed in media worlds whose shape and behaviour react to their presence. The interface between the human participants and the media systems occurs through networked sensing technologies that provide data from individuals, groups, and their social dynamics. The systems can analyse this data and use it to drive media ranging from the visual and sonic to the tactile or even olfactory.

Wearable computing, gestural instruments, sensor networks, permaculture, and tensegrity structures can be combined in responsive environments. In these environments, human–computer interaction (HCI) and human–computer–human interaction (HCHI) rely on subtle negotiation between the human participants and the computational system, moving away from stimulus–response metaphors.

Play removes tension, which frees our minds to experiment a little longer than normal – curious to see what unexpected solutions arise from the apparent chaos.

– Stefan Geyer, Zen in the Art of Permaculture Design

Unlike strict rule-based gameworlds, responsive environments facilitate conversational interactions that can become free-form playspaces. These environments are composed of media, materials, and architectures that are incorporated into public spaces, creating an inter-action and inter-being between the real and imaginary, technological and biological systems.

When dealing with the physical environment and non-symbolic aspects of human interaction, many HCI methodologies developed for screens or object-based systems can prove misleading. Conventional HCI relies on a thin stream of bits, for example discrete characters or the coordinates of a pointer moving over defined regions of a flat surface, which can then be encoded, compressed, and differentiated from noise. Sensor-based input can range from the discrete (switches) to the continuous (time-based data streams). Interaction in responsive environments sits closer to the continuous end of this spectrum, where computer systems can be experienced as components of a physical reality in which action is continuous and unpredictable.
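To make the contrast between the two ends of the spectrum concrete, here is a minimal sketch, assuming a single sensor channel normalised to the range 0 to 1. The functions `discrete_events` and `continuous_features`, the threshold and the smoothing factor `alpha` are hypothetical illustrations, not part of the t*series systems. The first reads the channel like a switch and keeps only on/off transitions; the second keeps a smoothed level and a rate of change at every sample.

```python
def discrete_events(samples, threshold=0.5):
    """Read the channel like a switch: report only on/off transitions."""
    events = []
    is_on = False
    for t, value in enumerate(samples):
        now_on = value >= threshold
        if now_on != is_on:
            events.append((t, "on" if now_on else "off"))
            is_on = now_on
    return events


def continuous_features(samples, alpha=0.3):
    """Read the channel as a stream: keep a smoothed level and a rate of change
    for every sample (alpha is the weight given to the newest sample)."""
    features = []
    level = samples[0]
    previous = samples[0]
    for value in samples:
        level = alpha * value + (1 - alpha) * level  # exponential moving average
        features.append({"level": level, "velocity": value - previous})
        previous = value
    return features
```

For the samples `[0.0, 0.2, 0.6, 0.9, 0.4, 0.1]`, `discrete_events` returns `[(2, 'on'), (4, 'off')]`, discarding the gradual rise and fall that `continuous_features` still carries.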

Towards immersive systems

Human senses mediate our interconnection with the world, dominated by sight and skin, tuned by sound, and enhanced by taste and smell. Our nervous system continuously analyses, modulates, and adjusts the multisensory manifold that seeps into our bodies through our senses to such a degree that we perceive ourselves as immersed in the world. Since childhood, we’ve learned to live and act in this world, and we intuitively grasp the ongoing exchange between the world and our own existence.

In responsive environments, this inherent knowledge can lead to a more intuitive, embodied interaction with systems capable of operating in a similar, continuous, immersive fashion. Hence, responsive systems should be able to sense (rather than merely detect) not just presence or absence, but the range and subtleties of human gestures and interactions.

If these systems behave as entities aware of their own presence and that of the people interacting with them, they should be able to enter into a dialogue (or polylogue) and thus evolve beyond the silent black- or beige-boxed observers that computer systems have become. They could expand the thin data channel of computer-mediated ‘sense’ beyond what humans are currently capable of, allowing us to touch over distance, see in multiple directions simultaneously, or have our ‘ears’ distributed through different environments and time frames.

What if we could engage with computational systems more as we would with a living entity? Such a system can sense an aggregate of stimuli, through which it forms (or appears to have formed) a multimodal image of both the physical environment and its own internal computational state. At the intersection between the physical and the computational, the system perceives the changes caused by human participants and other physical entities (their movement, social interaction, biometric information), as well as more autonomous changes in its own simulated dynamics (such as swarms or particle systems). Furthermore, the system can interpret these changes and formulate meaningful responses in real time, for an audience that may be overwhelmed by audiovisual stimuli and trying to make sense of the experience.

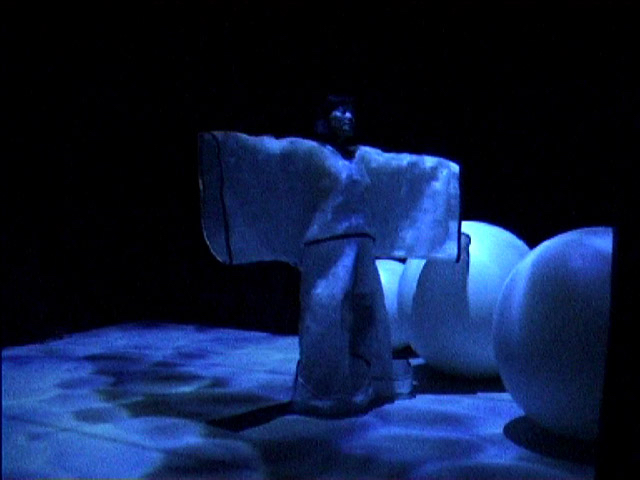

TGarden, SIGGRAPH, New Orleans, USA, 2000


Responsive environments can be seen as phenomenological experiments for non-intentional public spaces: spaces designed to evolve without a specific goal and to encourage a multiplicity of interactions and behaviours. The events within them are unprescribed. The human participants play and experiment individually or in groups, as if entering unknown terrain that they feel free to explore. Such environments let the participants experience the effects of their individual actions on human temporal and spatial scales (in real time, at room size) through their own senses.

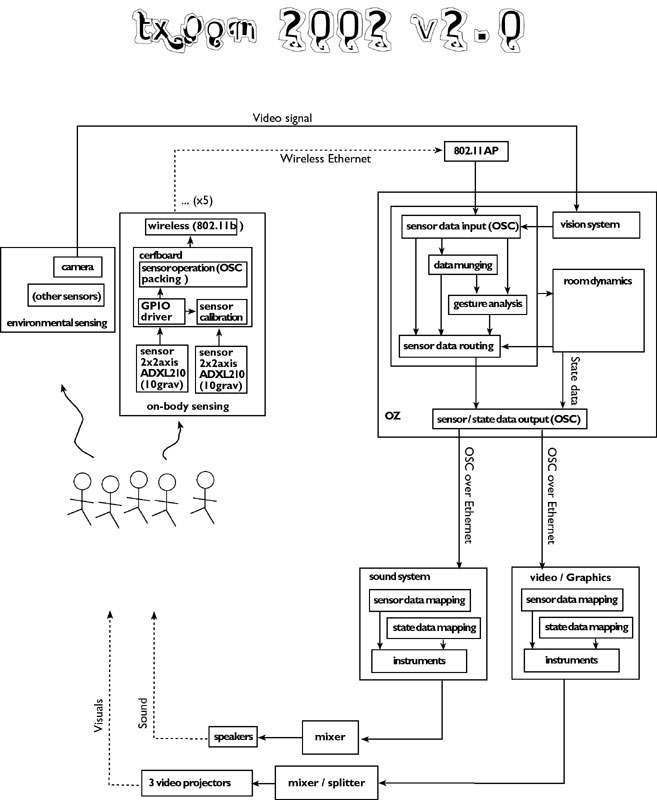

txOom systems test, La Rotonda, Torino, Italy, 2002

Perceptual technologies

When we look at form, we have to look in such a way that we can touch the nature of interbeing of form. Form is made of non-form elements. Body is made of non-body elements. Perception is made of non-perception elements. And in that way we will never get caught in duality and the idea of a self.

– Thích Nhất Hạnh, Interbeing and the Nature of Perception

Perception is the crucial link between the environment and any action or response beyond the most basic reflex. The bandwidth of most current sensor systems is made up of many small, comparatively thin channels, which together provide a more spatially and temporally distributed viewpoint than the fixed, high-bandwidth human sensory apparatus.

Computers are particularly good at accumulating and processing massive amounts of data, as long as it is Shannon-type information: information that can be measured and quantized. However, their ability to process human gestures, encode embodied motion, and form associations or act on such data is minimal and often unwieldy. Any computer-based process operating at the human scale would therefore need to handle ambiguity, variability, and richness of activity, while also manipulating the acquired data at a much faster rate.

In TGarden, we designed a “media choreography” system that shifted media responses between states on the basis of raw sensor data, much as a choreographer designs dancers’ movement through space.
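How TGarden’s state changes were implemented is not described here. As a purely illustrative sketch, a media choreography layer could collapse raw sensor readings into one smoothed activity level and move between named media states with some hysteresis, so that the media does not flicker when activity hovers near a boundary. The state names (`still`, `drifting`, `storm`), the thresholds and the `MediaChoreographer` class are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical media states, each with the activity level (0..1) at which it begins.
STATES = [("still", 0.0), ("drifting", 0.3), ("storm", 0.7)]
ORDER = [name for name, _ in STATES]
LOWER = dict(STATES)


@dataclass
class MediaChoreographer:
    state: str = "still"
    hysteresis: float = 0.05  # margin that keeps states from flickering at a boundary
    smoothing: float = 0.2    # how quickly the activity estimate follows new readings
    activity: float = 0.0

    def target_state(self, activity):
        # The highest state whose lower bound the activity has reached.
        chosen = ORDER[0]
        for name, lower in STATES:
            if activity >= lower:
                chosen = name
        return chosen

    def update(self, raw_sensor_values):
        # Collapse raw readings (assumed normalised to 0..1) into one activity level,
        # then smooth it so a single spike cannot throw the media into a new state.
        instantaneous = sum(abs(v) for v in raw_sensor_values) / len(raw_sensor_values)
        self.activity += self.smoothing * (instantaneous - self.activity)

        candidate = self.target_state(self.activity)
        here, there = ORDER.index(self.state), ORDER.index(candidate)
        if there > here and self.activity >= LOWER[candidate] + self.hysteresis:
            self.state = candidate  # rise only once clearly past the candidate's threshold
        elif there < here and self.activity < LOWER[self.state] - self.hysteresis:
            self.state = candidate  # fall only once clearly below the current threshold
        return self.state
```

Fed a constant reading of `[1.0, 1.0]`, this choreographer passes from `still` to `drifting` on the second update and reaches `storm` on the seventh; when the readings drop to zero, it settles back through `drifting` to `still`.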

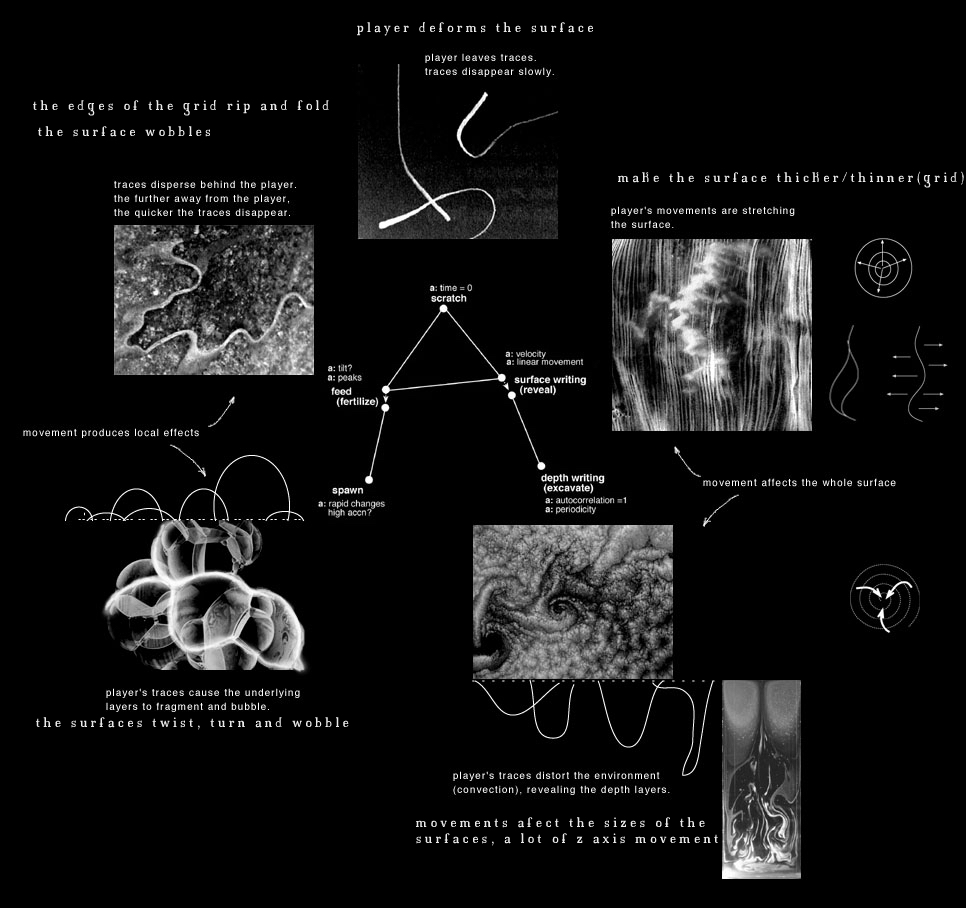

In txOom, we derived the environmental dynamics from a geometric landscape on which different media states were visualised as surface distortions. The human players were modelled in the media system as particles moving on this dynamic surface, with their sensor input modulating the particles’ trajectories.
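A minimal sketch of this idea, assuming the landscape is a sum of Gaussian hills and basins (one per media state) and that each player’s sensor input arrives as a two-dimensional push. The `Bump` and `Player` types, the Gaussian shape, the friction value and the time step are hypothetical choices for illustration; txOom’s actual surface model is not reproduced here. Each particle rolls downhill along the surface gradient while the sensor push bends its trajectory.

```python
import math
from dataclasses import dataclass


@dataclass
class Bump:
    """A hypothetical surface distortion standing for one media state."""
    x: float
    y: float
    height: float  # positive: a hill players roll away from; negative: a basin that gathers them
    width: float


@dataclass
class Player:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def surface_height(bumps, x, y):
    # The landscape is the sum of Gaussian distortions.
    return sum(
        b.height * math.exp(-((x - b.x) ** 2 + (y - b.y) ** 2) / (2 * b.width ** 2))
        for b in bumps
    )


def gradient(bumps, x, y, eps=1e-4):
    # Central differences are accurate enough for a sketch.
    dx = (surface_height(bumps, x + eps, y) - surface_height(bumps, x - eps, y)) / (2 * eps)
    dy = (surface_height(bumps, x, y + eps) - surface_height(bumps, x, y - eps)) / (2 * eps)
    return dx, dy


def step(player, bumps, sensor_push, dt=0.05, friction=0.9):
    """Advance one player-particle: it rolls downhill on the surface while its
    sensor input (a 2-D force) bends the trajectory."""
    gx, gy = gradient(bumps, player.x, player.y)
    player.vx = friction * player.vx + (-gx + sensor_push[0]) * dt
    player.vy = friction * player.vy + (-gy + sensor_push[1]) * dt
    player.x += player.vx * dt
    player.y += player.vy * dt
```

Released on the rim of a single basin with no sensor input, a player settles at its centre; with a steady sideways push of 0.2, it comes to rest about 0.2 units up the slope, where the push balances the surface gradient.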

The TRG model moves further away from state-based dynamics, constructing a media world shaped by simulated forces that are assigned to particular qualities of the participant’s actions. We based the system on a fantastic physics (akin to Alfred Jarry’s pataphysics) that lets the space grow, decay, compact, or expand on the basis of different actions it perceives.
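One way to picture a force-based model, as opposed to a state-based one, is a space whose extent and mass are pushed around by forces tied to qualities of action. In this sketch, the qualities (`energy`, `proximity`, `stillness`), their force values and the constant decay are invented for illustration and do not reproduce the trg physics; they only show how growth, decay, compaction and expansion can emerge from continuous forces rather than discrete state changes.

```python
from dataclasses import dataclass


@dataclass
class Space:
    extent: float = 1.0  # how far the media world reaches (expands / compacts)
    mass: float = 1.0    # how much material it holds (grows / decays)


# Hypothetical mapping from qualities of action to simulated forces:
# quality -> (force on extent, force on mass) per unit of that quality.
FORCE_MAP = {
    "energy": (+0.8, 0.0),     # vigorous movement pushes the space outward
    "proximity": (-0.6, 0.0),  # participants gathering close compact it
    "stillness": (0.0, +0.5),  # sustained stillness lets it grow
}
DECAY = 0.1  # constant pull toward dissolution when nothing sustains the space


def apply_forces(space, qualities, dt=0.1):
    """Integrate one time step; qualities maps a quality name to its strength."""
    d_extent = 0.0
    d_mass = -DECAY * space.mass
    for quality, amount in qualities.items():
        f_extent, f_mass = FORCE_MAP.get(quality, (0.0, 0.0))  # unknown qualities exert no force
        d_extent += f_extent * amount
        d_mass += f_mass * amount
    space.extent = max(0.1, space.extent + d_extent * dt)  # never collapse completely
    space.mass = max(0.0, space.mass + d_mass * dt)
    return space
```

Ten steps of pure `energy` expand the space from 1.0 to about 1.8 while its mass slowly decays; sustained `stillness` instead makes the mass grow toward an equilibrium of 5.0, where growth and decay balance.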

The tight coupling between sensory input and environmental feedback shares motivation (and, to a degree, methodology) with the “bottom-up” approach found in embodied robotics research. Slower, less direct coupling has more in common with biological or physical simulations. Our investigation occupies the region between these extremes.
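The range between these extremes can be pictured as a single coupling parameter. The sketch below assumes a first-order low-pass filter (a leaky integrator) with time constant `tau`, a generic stand-in rather than the coupling used in the t*series: with `tau = 0` the media parameter mirrors the sensor at once, as in reflex-like robotic coupling, while a large `tau` lets input act only gradually, more like a force perturbing an ongoing simulation.

```python
import math


def couple(inputs, tau, dt=0.02):
    """Return the media-parameter trajectory driven by a sensor signal.

    tau (seconds) sets the coupling: tau <= 0 mirrors the input immediately
    (tight coupling); a large tau lets the input act only slowly.
    """
    if tau <= 0:
        return list(inputs)
    k = 1.0 - math.exp(-dt / tau)  # fraction of the remaining gap closed each step
    y = 0.0
    out = []
    for x in inputs:
        y += k * (x - y)
        out.append(y)
    return out
```

For a constant input of 1.0 held for one second (50 steps of 20 ms), `tau=0.1` has essentially converged to 1.0, while `tau=2.0` has reached only about 0.39.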

In responsive environments, people can express themselves through familiar actions and gestures, without needing to learn new interface metaphors. HCI occurs through an intricate network of sensing technologies, perceptual analysis, and evocative real-time media, targeted toward nonverbal and non-symbolic interaction with the environment and other participants. This interaction can include untrained, habitual movements such as touching, caressing, grabbing, bending, walking, and jumping. In a sense, the environment ‘recycles’ the residual energy of a body’s motion into a resource for media generation and output.
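As a hedged illustration of this ‘recycling’, the sketch below assumes a body-worn three-axis accelerometer reporting in m/s², estimates the energy in a window of motion by subtracting gravity from the acceleration magnitude, and spends that energy as the density of sound grains. The sensor placement, the gravity-subtraction shortcut, `max_energy` and `max_grains` are assumptions for illustration, not details of the original systems.

```python
import math

GRAVITY = 9.81  # m/s^2; assumes a 3-axis accelerometer worn on the body


def motion_energy(samples):
    """Mean squared 'dynamic' acceleration over a window of (ax, ay, az) samples.

    Subtracting gravity from the magnitude is a crude way of removing the
    static component; a real system would filter more carefully.
    """
    total = 0.0
    for ax, ay, az in samples:
        dynamic = math.sqrt(ax * ax + ay * ay + az * az) - GRAVITY
        total += dynamic * dynamic
    return total / len(samples)


def grain_density(energy, max_energy=25.0, max_grains=200):
    """Hypothetical mapping: spend the body's surplus motion as sound-grain density."""
    return round(max_grains * min(energy, max_energy) / max_energy)
```

A motionless wearer produces no grains, while a sustained surplus of 5 m/s² saturates the mapping at 200 grains; everything in between scales linearly.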

TGarden generative media dynamics diagram, Las Palmas, V2, Rotterdam, The Netherlands, 2001

When the environments are designed as semipermeable ‘skins’, the costumes, architectural elements, and media systems can suggest different types of movement (e.g. heavy, light, restricted, bouncy, spinning). As participants explore the materials, shapes, and regions that they find most compelling, the environment’s ambience and mood change accordingly. This real-time interaction can raise the participants’ awareness of their effect on the surroundings, and perhaps even stimulate a sense of interbeing. These are direct, embodied experiences of the complex entanglements between human and other agencies in a technologically driven world, where boundary conditions are already blurred, bleeding strange edge effects and oozing imaginary realities into the everyday.

Think what it would be to have a work conceived from outside the self. A work that would let us escape the limited perspective of the individual ego, not only to enter into selves like our own, but to give speech to that which has no language, to the bird perching on the edge of the gutter, to the tree in spring and the tree in fall, to stone, to cement, to plastic. Was this not perhaps what Ovid was aiming at when he wrote about the continuity of forms? And what Lucretius was aiming at when he identified himself with that nature common to each and every thing?

– Italo Calvino, Six Memos for the Next Millennium

txOom participants, The Hippodrome Circus, Great Yarmouth, UK, 2002


🜛