Multiplex Translations | Entangled Aphasia

Imagining tools that enable deep interconnection, enhance complex relationships between components, and allow for more generative and evolving media communication, transformation and translation.

Including excerpts from the conference paper for the International Conference on Media Futures in Florence, Italy, 2001.

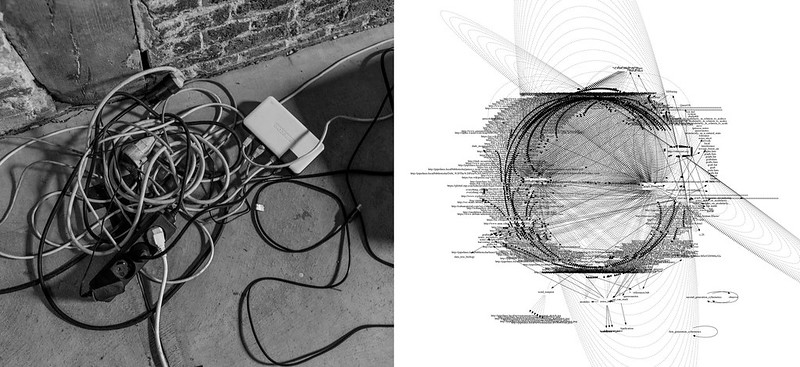

Promiscuous Pipe(line)s, Constant & FoAM, Brussels, Belgium, 2015


While many tools in the 2020s support importing and exporting between file formats far more robustly than tools did in 2001, the deeper vision of tools that learn, remember, and disentangle complex information in smart ways is still largely unrealised. Most media tools are not yet "context-sensitive", nor can they dynamically evolve their functionality to the degree proposed. Achieving tools with this level of adaptability and "understanding" remains an open challenge.

The future of media tools does not lie in developing more specialised tools for representation, but in supporting the emergence of tools that enable deep interconnection, that enhance complex relationships between multiple components or subsystems, and that allow a more generative or evolving media communication, transformation and translation.

One of the major problems with current authoring and representation tools is their inability to translate between data-formats, protocols, interfaces and devices. The languages, systems and logic they are built upon do not allow for graceful degradation of their functionality. Graceful degradation is the capacity of a complex system to maintain (limited) functionality even when it is largely broken or decayed, or has been partly destroyed or otherwise rendered inoperative. Graceful degradation can prevent catastrophic failure by using the remaining working components to adapt, reroute or reduce functionality. Think, for example, of the impact of cyber attacks on internet infrastructure, or of supply-chain disruptions (such as those at the beginning of the Russian invasion of Ukraine) on the electrical grid. When a particular data-format is not understood, current tools seem to respond in one of two ways: they output gibberish or they crash, both equally useless.

A future media environment should be able to handle this problem more elegantly. Such tools would focus on the processes that collate, relate and analyse media, enabling connections and performing continuous operations on a range of media elements. They would be context-sensitive, built upon extendable and flexible frameworks. They should be data-driven, and handle the problem of unknown data-formats, devices or protocols through negotiation and translation between the entity demanding information and a dynamic network of computational entities that provide the information, or that suggest how to handle this particular type of information. The system should begin to learn and remember, disentangling itself from the current mess.
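To make the idea of negotiation and graceful degradation a little more concrete, here is a minimal sketch, assuming a hypothetical `Provider` (an entity advertising handlers per format) and a hypothetical `Negotiator` that asks the network for help. None of these names come from the paper. A failing component is routed around rather than crashing the whole system, the provider that worked is remembered for next time, and when nobody understands a format the system reports what it has instead of producing gibberish.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical: a handler turns raw bytes into something displayable, or returns None.
Handler = Callable[[bytes], Optional[str]]


@dataclass
class Provider:
    """A hypothetical computational entity advertising the formats it can handle."""
    name: str
    handlers: dict[str, Handler] = field(default_factory=dict)


@dataclass
class Negotiator:
    network: list[Provider]
    memory: dict[str, str] = field(default_factory=dict)  # format -> provider that worked last

    def _candidates(self, fmt: str) -> list[Provider]:
        # Try the provider remembered for this format first; keep the rest in network order.
        remembered = self.memory.get(fmt)
        return sorted(self.network, key=lambda p: p.name != remembered)

    def render(self, data: bytes, fmt: str) -> str:
        for provider in self._candidates(fmt):
            handler = provider.handlers.get(fmt)
            if handler is None:
                continue
            try:
                result = handler(data)
            except Exception:
                continue  # a broken component is skipped, not fatal
            if result is not None:
                self.memory[fmt] = provider.name  # remember what worked
                return result
        # Nobody understood the format: degrade to a description, not gibberish or a crash.
        return f"[unrendered {fmt}: {len(data)} bytes]"


if __name__ == "__main__":
    broken = Provider("broken", {"text/plain": lambda b: 1 / 0})
    plain = Provider("plain", {"text/plain": lambda b: b.decode("utf-8")})
    n = Negotiator([broken, plain])
    print(n.render(b"hello", "text/plain"))          # hello
    print(n.render(b"\x00\x01", "image/x-unknown"))  # [unrendered image/x-unknown: 2 bytes]
```

The "learning" here is deliberately trivial (a lookup table of past successes); it only illustrates where remembered experience could plug into the negotiation loop.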

Ideally, intermedia tools would span multiple platforms and be scalable from a text-only interface to an immersive virtual environment. The media would be scaled or transformed by the environment in which they are experienced. The final media output should be the result of negotiations between internal rules associated with each element (or group of elements) and the external conditions/obstacles created by the environment.

Another required aspect of scalability is the customisation of the user interface to each user's particular needs and skills. Different types of media could be accessed and transformed through the same interface. For example, a developer comfortable with video editing systems could edit sound, graphics, text and scripts through a video-editing interface; a sculptor could have a tool that allows them to model digital media as if it were physical material.

Furthermore, building coherent media environments that change over time requires tools and processes for dynamic, improvised composition. Instead of working with static representation systems, we might need to look at systems that can create associations, observe patterns or represent information more dynamically in a consistent manner.

Arranging information spatially has a long tradition as an aid to memory. People are often able to fill their mind's eye with images to enhance recall, or their mind's ear with a particular rhythm or rhyme for reinforcement. These techniques let us do more than remember particular concepts; they also let us relate or associate these concepts using similar methods.

Even a small amount of spatial structure can yield disproportionately large gains in knowledge about how interrelated contents are structured. High-dimensional geometries can be represented quite easily in a computer and, if carefully structured, could contain a large amount of implicit information about the contents of the space. Manipulations and traversals of this geometry would be the computer's equivalent of 'My Very Educated Mother Just Served Us Pistachio Nuts' (a mnemonic for the order of the nine planets).
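As a rough illustration of how position alone can carry implicit order, the sketch below places a few media fragments at hypothetical coordinates (invented for this example) and recovers a sequence by a greedy nearest-neighbour walk. The walk plays the role of the mnemonic: nothing stores the order explicitly, it falls out of the geometry. Real systems would likely use many more dimensions and learned positions; this is only an assumption-laden toy.

```python
import math

# Hypothetical coordinates: related fragments are placed close together.
SPACE: dict[str, tuple[float, ...]] = {
    "sketch": (0.0, 0.0, 0.0),
    "storyboard": (1.0, 0.2, 0.0),
    "animatic": (2.0, 0.5, 0.1),
    "edit": (3.1, 0.4, 0.3),
    "sound": (3.0, 2.0, 0.0),
}


def traverse(space: dict[str, tuple[float, ...]], start: str) -> list[str]:
    """Greedy nearest-neighbour walk: from each point, step to the closest unvisited point."""
    path = [start]
    unvisited = set(space) - {start}
    while unvisited:
        here = space[path[-1]]
        nearest = min(unvisited, key=lambda name: math.dist(here, space[name]))
        path.append(nearest)
        unvisited.remove(nearest)
    return path


if __name__ == "__main__":
    print(traverse(SPACE, "sketch"))
    # ['sketch', 'storyboard', 'animatic', 'edit', 'sound']
```

Starting the walk from "sound" instead yields the reverse sequence, so the same geometry can be read in more than one direction.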

Transforma[c]tion, Starlab, Brussels, Belgium, 2000


A proposition: sutChwon (Subject to change without notice)

In the growing number of international collaborative projects, most design, development and distribution tasks are communicated through local and global networks. These networks consist of a variety of systems or entities, and of interfaces that connect these systems to human-readable reality. A range of possibly incompatible protocols, data formats and platforms can be expected. The networks can fluctuate, connections can be lost and new entities can enter the network. As this becomes the standard condition of working with telecommunications technology, techniques for dealing gracefully with these problems become necessary.

SutChwon could become a framework for the construction of a flexible system for remote collaboration (CSCW/CSCD – computer supported collaborative work / design). This system could act as an interconnecting layer between several autonomous computational entities, and should allow effortless communication between platforms, protocols, data-formats and interfaces. Automated content and protocol negotiation are a central aspect of SutChwon, as is service discovery or learning. With the further integration of machine learning techniques, the system should be able to adapt to new and unknown computational environments in which it must operate. In practice this means that production and representation of media would converge, resulting in tools that evolve through time and usage. SutChwon could enable open and adaptive models of communication and interaction within complex and multi-layered networked collaborations.
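The automated content negotiation described for SutChwon could be sketched as follows. This is not the framework's design, which the paper does not specify; it is a minimal illustration, loosely modelled on the q-value preferences of HTTP's `Accept` header, with hypothetical function names and format sets. Two entities advertise the formats they support with a preference weight; `negotiate` picks the shared format with the highest joint preference, and `plan` falls back to a known translator when there is no common ground.

```python
from typing import Optional

# Hypothetical capability advertisement: format -> preference weight in [0, 1].
Capabilities = dict[str, float]


def negotiate(producer: Capabilities, consumer: Capabilities) -> Optional[str]:
    """Pick the format both sides support with the highest joint preference."""
    shared = producer.keys() & consumer.keys()
    scored = [(producer[f] * consumer[f], f) for f in shared
              if producer[f] > 0 and consumer[f] > 0]
    if not scored:
        return None  # no common format
    return max(scored)[1]  # ties broken by format name, for determinism


def plan(producer: Capabilities, consumer: Capabilities,
         translators: set[tuple[str, str]]) -> Optional[tuple[str, str]]:
    """Return (format sent, format received); the two differ only when translation is needed."""
    direct = negotiate(producer, consumer)
    if direct is not None:
        return (direct, direct)
    # No shared format: look for a hypothetical translator bridging the two sides.
    options = [(producer[s] * consumer[d], s, d) for (s, d) in translators
               if producer.get(s, 0) > 0 and consumer.get(d, 0) > 0]
    if not options:
        return None  # the caller must degrade gracefully
    _, src, dst = max(options)
    return (src, dst)


if __name__ == "__main__":
    studio = {"video/mp4": 1.0, "image/png": 0.6}
    phone = {"video/webm": 1.0, "image/png": 0.8}
    terminal = {"text/plain": 1.0}
    translators = {("image/png", "text/plain"), ("video/mp4", "video/webm")}
    print(plan(studio, phone, translators))     # ('image/png', 'image/png')
    print(plan(studio, terminal, translators))  # ('image/png', 'text/plain')
```

Multiplying the two weights is one simple way to combine preferences; a real system might weigh translation cost, bandwidth or fidelity instead, and discover translators on the network rather than receive them as a fixed set.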

🜛

The original "sutChwon" framework envisioned a flexible system for interconnecting diverse data sources, media types, and collaborative workflows. In 2025, we can see echoes of this vision in computational notebooks (such as Jupyter, Pluto or Clerk) and personal knowledge management tools (such as org-mode, Notion, or Obsidian), which allow users to create interconnected networks of notes, media, and ideas, supporting emergent organisation and the discovery of relationships between information fragments. But while these tools succeed in interlinking human-readable content, they don't yet realise sutChwon's vision of generative interoperability and translation between arbitrary data formats and protocols. The burden still largely falls on users to manually import, structure, and relate information across sources.

Generative language models gesture towards the potential for systems that can dynamically generate and manipulate media based on patterns inferred from vast datasets. Knowledge graphs and neural networks enable rich, evolving representations of concepts and relationships. Techniques like self-supervised learning and meta-learning suggest paths towards systems that can flexibly adapt to new domains and tasks without extensive manual fine-tuning. These approaches resonate with sutChwon's goals of graceful degradation and operation in unknown environments.

In 2025, we can also see hints of the 2001 paper's vision of converging media production and representation in tools like Figma or Miro, which enable real-time, multi-user creation and editing of graphics, documents, and interactive prototypes in the cloud (i.e. someone else's computer): tools that blur the lines between authoring and experiencing media. However, these (and similar) tools still rely heavily on direct human input and control, lacking an "understanding" of meaning and relationships within the media they operate on. The full realisation of intelligent, context-aware media environments that learn and evolve through use remains (for now) a work in progress...

🜛